Imagine you want to gather a large amount of data from several websites as quickly as possible. Will you do it manually, or will you find a practical way to collect it all? You might be asking yourself why you would want to do that at all. Follow along as we go over some examples that show the need for web scraping:

Introduction

- Wego is a website where you can book flights and hotels. It finds you the lowest price by comparing 1000 booking sites, and web scraping is what makes that comparison possible.

- Plagiarismdetector is a tool for checking your article for plagiarism. It also uses web scraping, comparing your text against thousands of other websites.

- Many companies also use web scraping to make strategic marketing decisions: they scrape social network profiles to find the posts with the most interactions.

Prerequisites

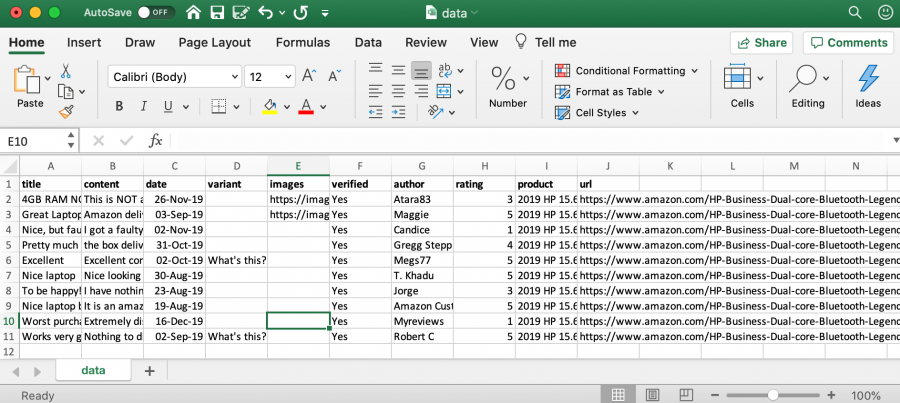

A simple amazon scraper to extract product details and prices from Amazon.com using Python Requests and Selectorlib. Full article at ScrapeHero Tutorials There are two simple scrapers in this project. Amazon Product Page Scraper amazon.py. Web Scraping with Python: Collecting More Data from the Modern Web Mitchell, Ryan on Amazon.com.FREE. shipping on qualifying offers. Web Scraping with Python: Collecting More Data from the Modern Web.

Before we dive in, you will need:

- A good understanding of the Python programming language.

- A basic understanding of HTML.

Now that we have a brief overview of web scraping, let’s talk about the most important topic: the legal issues surrounding it.

How do you know if a website allows web scraping?

- Add “/robots.txt” to the site’s URL, for example www.facebook.com/robots.txt, to see the site’s scraping rules and what it forbids crawlers to scrape.

For example:

The rule above asks crawlers to wait 5 seconds between requests.
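Python’s standard library can read a Crawl-delay rule like this through urllib.robotparser. A minimal sketch, assuming a hypothetical robots.txt (not any real site’s file), a made-up user-agent name (MyScraper), and a hypothetical helper called get_crawl_delay:

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt asking every crawler to wait 5 seconds between requests
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 5
"""


def get_crawl_delay(robots_txt: str, user_agent: str, default: float = 1.0) -> float:
    """Return the delay (in seconds) that robots.txt asks user_agent to respect."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse text we already have, no network call
    delay = parser.crawl_delay(user_agent)  # None when no Crawl-delay rule applies
    return float(delay) if delay is not None else default


delay = get_crawl_delay(ROBOTS_TXT, "MyScraper")
print(f"Wait {delay} seconds between requests")  # call time.sleep(delay) inside your loop
```

In a real scraper you would download the site’s robots.txt first (RobotFileParser also offers set_url() and read() for that) and sleep for the returned delay between requests.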

Another example:

You can find the rule above in www.facebook.com/robots.txt. It means that Discord’s bot is allowed to scrape Facebook videos.
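The same module can answer per-crawler questions like this one. The robots.txt below is a simplified, hypothetical file in that spirit, not a copy of Facebook’s, and MyScraper is a made-up agent. Python’s parser applies the first matching rule in a group, so the narrower Allow line comes before the broader Disallow:

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: Discordbot may fetch /videos/, every other crawler is blocked
ROBOTS_TXT = """\
User-agent: Discordbot
Allow: /videos/
Disallow: /

User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

checks = [
    ("Discordbot", "https://www.example.com/videos/123"),
    ("Discordbot", "https://www.example.com/profile"),
    ("MyScraper", "https://www.example.com/videos/123"),
]
for agent, url in checks:
    verdict = "allowed" if parser.can_fetch(agent, url) else "disallowed"
    print(agent, url, verdict)
```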

- You can also run the following Python code, which makes a GET request to the website’s server:

If the result is 200, the server returned the page successfully. A 200 status does not by itself grant permission to scrape, so you still have to check the scraping rules.
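A minimal sketch of that GET request, using urllib from the standard library (requests works the same way). get_status_code is a hypothetical helper name and the User-Agent value is a placeholder. The status code reports whether the server served the page, not whether the site’s rules permit scraping:

```python
from urllib.error import HTTPError
from urllib.request import Request, urlopen


def get_status_code(url: str, user_agent: str = "Mozilla/5.0") -> int:
    """Send a GET request to url and return the HTTP status code."""
    request = Request(url, headers={"User-Agent": user_agent})
    try:
        with urlopen(request, timeout=10) as response:
            return response.status  # 200 when the page was served
    except HTTPError as error:
        # urlopen raises on 4xx/5xx responses; the status code is still worth reporting
        return error.code


# Usage: print(get_status_code("https://www.example.com/"))
```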

Even when the request returns 200, keep the following points in mind:

- You can only scrape data that is publicly available, such as product prices. You cannot scrape anything private, such as content behind a sign-in page.

- Many websites do not allow scraped data to be used for commercial purposes, so check the site’s terms before doing so.

- Some websites, such as Amazon, provide an API you can use instead of scraping; you can find Amazon’s API here.

As we know, Python has different libraries for different purposes.

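In this tutorial, we are going to use the Beautiful Soup 4, urllib, requests, and plyer libraries. urllib is part of Python’s standard library, so only the other three need to be installed.

On Windows, you can install them with the following command in your terminal:

pip install beautifulsoup4 requests plyer

On Linux, you can use:

pip3 install beautifulsoup4 requests plyer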

You’re ready to go. Let’s get started and learn more about web scraping through two real-life projects.

Reddit Web Scraper

A year ago, I wanted to build a smart AI bot that talked like a human. The problem was that I didn’t have a good dataset to train it on, so I decided to use posts and comments from Reddit.

Here we will go step by step through building the basics of that app, using https://old.reddit.com/.

First of all, we imported the libraries we want to use in our code.

The requests library lets us send GET, PUT, and other requests to the website’s server, and the Beautiful Soup library parses a page so we can pull specific items out of it. We’ll see this in a practical example soon.

Second, the URL we are going to use is for the TOP posts on Reddit.

Third, the headers include a “User-Agent” that makes our request look like it comes from a browser, so the server is less likely to recognize it as a bot and limit the number of requests. To find your own User-Agent, search for “what is my User-Agent?” in your browser.

Finally, we sent a GET request to that URL and pulled out the page’s HTML with the Beautiful Soup library.
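Put together, those four steps might look like the sketch below. The exact path for top posts and the User-Agent string are assumptions (paste in your own User-Agent), fetch_soup is a hypothetical helper name, and Reddit may still rate-limit or block scripted requests:

```python
import requests
from bs4 import BeautifulSoup

# Top posts on old Reddit (assumed path; adjust for the listing you want)
URL = "https://old.reddit.com/top/"

# Replace with the User-Agent string your own browser reports
HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/120.0 Safari/537.36"
    )
}


def fetch_soup(url: str = URL) -> BeautifulSoup:
    """Download url with a browser-like User-Agent and parse it with Beautiful Soup."""
    response = requests.get(url, headers=HEADERS, timeout=10)
    response.raise_for_status()  # stop on 4xx/5xx instead of parsing an error page
    return BeautifulSoup(response.text, "html.parser")


# Usage: soup = fetch_soup(); print(soup.title.get_text())
```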

Now let’s move on to the next step of building our app:

Open this URL and press F12 to inspect the page; you will see its HTML code. To find the HTML for a specific element, right-click that element and click Inspect.

After doing this on the first title on the page, you will see the following code, with the tag that holds the data you right-clicked highlighted:

Now let’s pull out every title on that page. You can see that there is a “div” called siteTable, and the titles are within it.

First, we search for that siteTable element, then get every “a” element in it that has the class “title”.

Then we extract the text of each element, which is the title, and store every title in a dictionary before printing them.
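A sketch of that extraction as a function that takes a parsed page. It assumes old Reddit’s markup as described above (a div with the id siteTable holding a elements with the class title), which Reddit can change at any time; extract_titles and the sample HTML are made up for illustration:

```python
from bs4 import BeautifulSoup


def extract_titles(soup: BeautifulSoup) -> dict:
    """Return {position: title} for every post title inside the siteTable div."""
    table = soup.find("div", id="siteTable")
    if table is None:  # markup changed, or the request was blocked
        return {}
    titles = {}
    # class_="title" matches any <a> whose class list includes "title"
    for position, link in enumerate(table.find_all("a", class_="title"), start=1):
        titles[position] = link.get_text(strip=True)
    return titles


# Small stand-in for the real page so the function can be tried offline
SAMPLE_HTML = """
<div id="siteTable">
  <p class="title"><a class="title may-blank" href="/r/a">First post</a></p>
  <p class="title"><a class="title may-blank" href="/r/b">Second post</a></p>
</div>
"""

for position, title in extract_titles(BeautifulSoup(SAMPLE_HTML, "html.parser")).items():
    print(position, title)
```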

After running our code you will see the following result, which is every title on that page:

Finally, you can do the same process for the comments and replies to build up a good dataset as mentioned before.

When it comes to collecting data from the web, an API is the first solution that comes to mind for most data scientists. An API (Application Programming Interface) is an intermediary that allows one piece of software to talk to another. In simple terms, you send the API a request for specific data, and it returns that data in a structured format such as JSON.

For example, Reddit has a publicly documented API, which you can find here.
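As a lightweight illustration of the JSON idea: Reddit listing pages can be requested as JSON by adding .json to the URL. This sketch assumes that public endpoint and its Listing layout (data, then children, then each child’s data.title); it is not Reddit’s official OAuth API, unauthenticated requests may be rate-limited, and the User-Agent value is a placeholder:

```python
import requests

HEADERS = {"User-Agent": "my-dataset-script/0.1"}  # placeholder; use a descriptive name


def fetch_listing(url: str = "https://www.reddit.com/top/.json") -> dict:
    """Download a Reddit listing as JSON (assumed public .json endpoint)."""
    response = requests.get(url, headers=HEADERS, params={"limit": 25}, timeout=10)
    response.raise_for_status()
    return response.json()


def titles_from_listing(listing: dict) -> list:
    """Pull the post titles out of a Listing-shaped JSON document."""
    return [child["data"]["title"] for child in listing["data"]["children"]]


# Usage: print(titles_from_listing(fetch_listing()))
```

Because the response is already structured, no HTML parsing is needed: the titles come straight out of the dictionary.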

It is also worth mentioning that some websites provide XHTML pages or RSS feeds that can be parsed as XML (Extensible Markup Language). XML describes the content rather than how the page looks, and it is free of formatting constraints, so a website that offers XML is much easier to scrape.

For example, Reddit provides RSS feeds that can be parsed as XML; you can find them here.
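Parsing such a feed needs only the standard library. This sketch assumes the feed URL ends in .rss and that the feed is served in the Atom flavour of XML, where each post is an entry element with a title child in the Atom namespace; check the actual feed before relying on that. fetch_feed and titles_from_feed are hypothetical helper names:

```python
import xml.etree.ElementTree as ET
from urllib.request import Request, urlopen

ATOM = {"atom": "http://www.w3.org/2005/Atom"}  # prefix used in the queries below


def fetch_feed(url: str = "https://www.reddit.com/.rss") -> bytes:
    """Download the raw feed XML (assumed URL)."""
    request = Request(url, headers={"User-Agent": "my-dataset-script/0.1"})
    with urlopen(request, timeout=10) as response:
        return response.read()


def titles_from_feed(xml_data) -> list:
    """Return the title of every Atom <entry> in the feed."""
    root = ET.fromstring(xml_data)
    return [
        entry.findtext("atom:title", default="", namespaces=ATOM)
        for entry in root.findall("atom:entry", ATOM)
    ]


# Usage: print(titles_from_feed(fetch_feed()))
```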

Let’s build another app to better understand how web scraping works.

COVID-19 Desktop Notifier

Now we are going to build a COVID-19 notification system that tells us the number of new cases and deaths in our country.

The data comes from the Worldometer website, where you can find real-time COVID-19 updates for any country in the world.

Let’s get started by importing the libraries we are going to use:

Here we are using urllib to make requests, but feel free to use the requests library from the Reddit Web Scraper example above.

We use the plyer package to show the notifications, and the time module to wait a set interval before the next notification pops up.

In the code above, you can replace US in the URL with the name of your country; urlopen does the same thing as opening the URL in your browser.
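A sketch of this step with urllib and Beautiful Soup. The URL pattern is an assumption based on Worldometer’s per-country pages, country_url and fetch_country_page are hypothetical helper names, and the site’s layout or update schedule may have changed since this tutorial was written:

```python
from urllib.request import Request, urlopen

from bs4 import BeautifulSoup

# Assumed pattern for Worldometer's per-country COVID-19 pages
BASE_URL = "https://www.worldometers.info/coronavirus/country/{country}/"


def country_url(country: str) -> str:
    """Build the page URL for a country slug such as "us"."""
    return BASE_URL.format(country=country.lower())


def fetch_country_page(country: str) -> BeautifulSoup:
    # Some sites reject urllib's default User-Agent, so send a browser-like one
    request = Request(country_url(country), headers={"User-Agent": "Mozilla/5.0"})
    with urlopen(request, timeout=10) as response:  # like opening the URL in a browser
        html = response.read()
    return BeautifulSoup(html, "html.parser")


# Usage: soup = fetch_country_page("us")
```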

Now, if we open this URL, scroll down to the UPDATES section, right-click on the “new cases” text, and click Inspect, we will see the following HTML code for it:

We can see that the new cases and deaths are inside an “li” tag with the class “news_li”. Let’s write a code snippet to extract that data.

After pulling out the page’s HTML and searching for that tag and class, we take the first “strong” element, which contains the number of new cases, and then use “next siblings” to reach the second one, which contains the number of new deaths.

In the last part of our code, we use an infinite while loop that shows the data we pulled out in a notification pop-up. The delay before the next notification is set to 20 seconds, which you can change to whatever you want.
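A sketch of that extraction with Beautiful Soup. It assumes the markup described above, with the two numbers in sibling strong tags inside the first li.news_li; latest_update and the sample HTML are made up for illustration, and the live page may differ:

```python
from bs4 import BeautifulSoup


def latest_update(soup: BeautifulSoup):
    """Return (new_cases, new_deaths) text from the first news_li item, or None."""
    item = soup.find("li", class_="news_li")
    if item is None:
        return None
    cases = item.find("strong")  # first <strong>: new cases
    # The next <strong> sibling holds the deaths; text nodes in between are skipped
    deaths = cases.find_next_sibling("strong") if cases is not None else None
    if cases is None or deaths is None:
        return None
    return cases.get_text(strip=True), deaths.get_text(strip=True)


SAMPLE_HTML = """
<ul><li class="news_li"><strong>1,234 new cases</strong> and
<strong>56 new deaths</strong> in the United States</li></ul>
"""

print(latest_update(BeautifulSoup(SAMPLE_HTML, "html.parser")))
```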
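A sketch of the notification loop using plyer’s notification.notify. get_update is a hypothetical callable returning (cases, deaths) text or None; calling it on every pass re-scrapes the page so each pop-up shows fresh numbers, which is a design choice of this sketch rather than necessarily what the original loop does:

```python
import time


def build_message(cases: str, deaths: str) -> str:
    """Format the scraped numbers for the notification body."""
    return f"{cases}\n{deaths}"


def notify_forever(get_update, interval: int = 20) -> None:
    """Show a desktop notification, wait `interval` seconds, and repeat until stopped."""
    # Imported here so build_message works without a desktop notification backend
    from plyer import notification

    while True:
        update = get_update()  # hypothetical: returns (cases, deaths) or None
        if update is not None:
            notification.notify(
                title="COVID-19 update",
                message=build_message(*update),
                timeout=10,  # seconds the pop-up stays visible
            )
        time.sleep(interval)
```

Stop the loop with Ctrl+C; change interval to adjust the 20-second delay.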

After running our code you will see the following notification in the right-hand corner of your desktop.

Conclusion

We’ve just seen that much of the web can be scraped and stored, and there are many reasons to use that information. For example:

Imagine you work for a social media platform and your task is to remove posts that may violate the community guidelines. One way to approach this is a web scraper that collects and stores the number of likes and comments for every post. If a post receives many comments but no likes, we can infer that it may be striking a nerve with people, and we should take a look at it.
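The heuristic in that example can be sketched as a simple filter over already-scraped numbers. flag_for_review, its thresholds, and the post dictionaries are made up for illustration; a real platform would tune them on its own data:

```python
def flag_for_review(posts, min_comments=100, max_likes=5):
    """Return posts with many comments but almost no likes (hypothetical thresholds)."""
    return [
        post
        for post in posts
        if post["comments"] >= min_comments and post["likes"] <= max_likes
    ]


posts = [
    {"id": "a", "likes": 2, "comments": 340},    # heavily discussed, barely liked
    {"id": "b", "likes": 900, "comments": 120},  # popular and discussed
    {"id": "c", "likes": 0, "comments": 3},      # simply quiet
]
print([post["id"] for post in flag_for_review(posts)])
```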

There are a lot of possibilities, and it’s up to you (as a developer) to choose how you will use that information.

About the author

Ahmad Mardeni: Ahmad is a passionate software developer, an avid researcher, and a businessman. He began his journey to become a cybersecurity expert two years ago, and he has taken part in many hackathons and programming competitions. As he says, “Knowledge is power,” so he wants to deliver good content as a technical writer.

Python Web Scraping Sample

Wouldn’t it be great if you could build your own FREE API to get product reviews from Amazon? That’s exactly what you will be able to do once you follow this tutorial using Python Flask, Selectorlib and Requests.

What can you do with the Amazon Product Review API?

An API lets you automatically gather data and process it. Some of the uses of this API could be:

- Getting the Amazon Product Review Summary in real-time

- Creating a Web app or Mobile Application to embed reviews from your Amazon products

- Integrating Amazon reviews into your Shopify store, WooCommerce, or any other eCommerce store

- Monitoring reviews for competitor products in real-time

The possibilities for automation using an API are endless so let’s get started.

Why build your own API?

You must be wondering if Amazon provides an API to get product reviews and why you need to build your own.

APIs provided by companies are usually limited, and Amazon is no exception. Amazon no longer allows you to get a full list of customer reviews for a product through its Product Advertising API. Instead, it provides an iframe that renders the reviews from its web servers, which isn’t really useful if you need the full reviews.

How to Get Started

In this tutorial, we will build a basic API that scrapes Amazon product reviews using Python and returns data in real time with all the fields that the Amazon Product API does not provide.

We will use the API we build in this exercise to extract the following attributes from a product review page (https://www.amazon.com/Nike-Womens-Reax-Running-Shoes/product-reviews/B07ZPL752N/ref=cm_cr_dp_d_show_all_btm?ie=UTF8&reviewerType=all_reviews):

- Product Name

- Number of Reviews

- Average Rating

- Rating Histogram

- Reviews

  - Author

  - Rating

  - Title

  - Content

  - Posted Date

  - Variant

  - Verified Purchase

  - Number of People Found Helpful

Installing the required packages for running this Web Scraper API

We will use Python 3 to build this API. You just need to install Python 3 from Python’s website.

We need a few Python packages to set up this real-time API:

- Python Flask, a lightweight server, will be our API server. We will send our API requests to Flask, which will then scrape the page and respond with the scraped data as JSON

- Python Requests, to download Amazon product review pages’ HTML

- Selectorlib, a free web scraper tool to mark up the data that we want to download

Install all these packages using pip3 in one command:

pip3 install flask requests selectorlib

The Code

You can get all the code used in this tutorial from Github – https://github.com/scrapehero-code/amazon-review-api

In a folder called amazon-review-api, let’s create a file called app.py with the code below.

Here is what the code below does:

- Creates a web server to accept requests

- Downloads a URL and extracts the data using the Selectorlib template

- Formats the data

- Sends data as JSON back to requester
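The repository linked above contains the actual app.py. As a rough, independent sketch of those four steps, assuming Flask’s request and jsonify helpers and Selectorlib’s Extractor.from_yaml_file and extract methods, it could look like this. It skips the data-formatting step, and the header values are placeholders:

```python
from functools import lru_cache

import requests
from flask import Flask, jsonify, request

app = Flask(__name__)

# Placeholder browser-like headers; Amazon often blocks the default requests User-Agent
HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/120.0 Safari/537.36"
    ),
    "Accept-Language": "en-US,en;q=0.9",
}


@lru_cache(maxsize=1)
def get_extractor():
    """Build the Selectorlib extractor from selectors.yml on the first scrape."""
    # Imported and loaded lazily, so the app starts even before selectors.yml exists
    from selectorlib import Extractor

    return Extractor.from_yaml_file("selectors.yml")


def scrape(url: str) -> dict:
    """Download a review page and extract its fields with the Selectorlib template."""
    response = requests.get(url, headers=HEADERS, timeout=15)
    response.raise_for_status()
    return get_extractor().extract(response.text)


@app.route("/")
def api():
    # The page to scrape arrives as ?url=...
    url = request.args.get("url")
    if not url:
        return jsonify({"error": "URL to scrape is not provided"}), 400
    try:
        return jsonify(scrape(url))
    except requests.RequestException as error:
        return jsonify({"error": str(error)}), 502
```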

Free web scraper tool – Selectorlib

You will notice in the code above that we used a file called selectors.yml. This file is what makes scraping Amazon reviews so easy in this tutorial, and the magic behind it is a tool called Selectorlib.

Selectorlib is a powerful, easy-to-use tool that makes selecting, marking up, and extracting data from web pages visual and simple. The Selectorlib Chrome Extension lets you mark the data you need to extract, creates the CSS selectors or XPaths needed to extract it, and then previews how the data would look. You can learn more about Selectorlib and how to use it here.

If you just need the data shown above, you don’t need to use Selectorlib, because we have already done that for you and generated a simple “template” you can use as-is. However, if you want to add a new field, you can use Selectorlib to add it to the template.

Here is how we marked up the fields in the code for all the data we need from Amazon Product Reviews Page using Selectorlib Chrome Extension.

Once you have created the template, click ‘Highlight’ to highlight and preview all of your selectors. Finally, click ‘Export’ and download the YAML file; that file is selectors.yml.

Here is what our selectors.yml looks like:

You need to put selectors.yml in the same folder as your app.py.

Running the Web Scraping API

To run the Flask API, run the following commands in a terminal:

Then you can test the API by opening the following link in a browser or by calling it from any programming language.
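A minimal Python client, assuming the app runs on Flask’s default development address (port 5000) and accepts the review page in a url query parameter; API_BASE, build_api_url, and call_api are hypothetical names:

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

API_BASE = "http://localhost:5000/"  # assumed: Flask's default development address


def build_api_url(review_page_url: str, api_base: str = API_BASE) -> str:
    """Encode the Amazon review-page URL as the API's ?url= query parameter."""
    return api_base + "?" + urlencode({"url": review_page_url})


def call_api(review_page_url: str) -> dict:
    """Ask the running API to scrape review_page_url and decode its JSON reply."""
    with urlopen(build_api_url(review_page_url), timeout=60) as response:
        return json.load(response)


# Usage (with the API running): call_api("<an Amazon product-reviews URL>")
```

Encoding the target URL matters because its own ? and & characters would otherwise be read as parameters of the API call.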

Your response should be similar to this:

This API should work for scraping Amazon reviews in your personal projects. You can also deploy it to a server if you prefer.

However, if you want to scrape thousands of pages, read about the challenges in Scalable Large Scale Web Scraping: How to build, maintain and run scrapers. If you need help with your web scraping projects or need a custom API, you can contact us.
